Many AIoT initiatives start with excitement around machine-learning models, edge intelligence, and automation. Yet, when these systems move from demos to real-world deployment, most failures don’t happen at the AI layer.

They happen because the data pipeline is weak.

In production systems, intelligence is only as reliable as the data flowing from sensors to decisions. At MetaDesk Global, we’ve repeatedly seen that the AIoT projects that ship successfully focus less on hype and more on building a robust, end-to-end data foundation.

The Hidden Problem in AIoT Projects

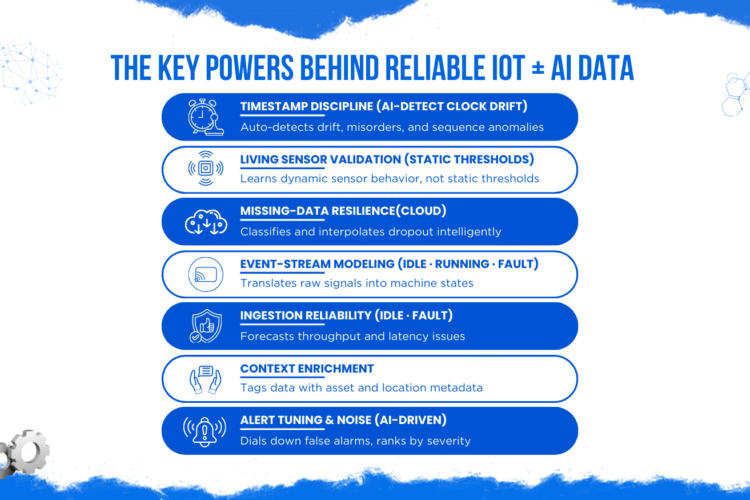

AIoT is often marketed as an AI challenge. In reality, it’s a systems engineering challenge. Teams invest heavily in model training while overlooking:

- Inconsistent sensor readings

- Latency and packet loss

- Unstructured or noisy data

- Poor edge-to-cloud coordination

Without a reliable pipeline, even the best models produce unreliable results.

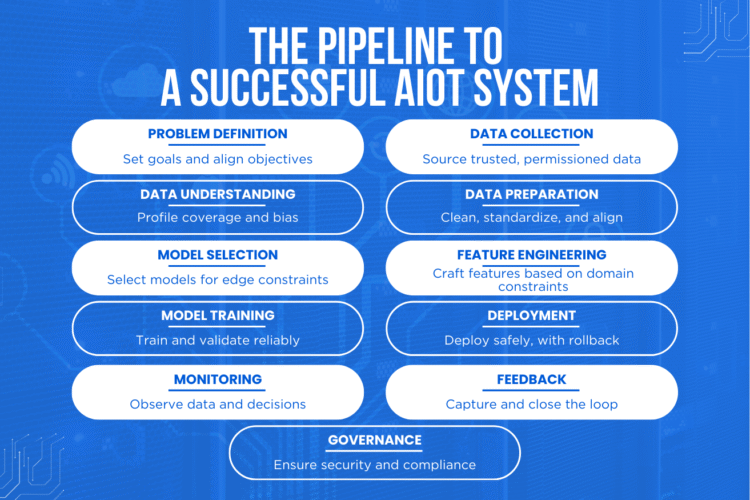

The AIoT Data Pipeline — From Sensor to Decision

A production-grade AIoT system is built in layers. Each layer must be reliable, scalable, and observable.

Sensor Data Generation

Every AIoT system begins at the physical layer. Sensors capture raw signals such as:

- Temperature and humidity

- Motion and vibration

- Audio, images, or equipment telemetry

The quality of these signals defines the upper limit of system intelligence. Poor sensor placement, calibration, or sampling strategy cannot be fixed later with AI.
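Calibration is one of the few signal-quality problems that can be handled at the source, in firmware or at ingestion time. The Python sketch below assumes a sensor with a linear response and a two-point calibration (two raw readings taken against known reference values). The `Calibration` class and its plausibility bounds are hypothetical; real sensors may need multi-point or temperature-compensated curves.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Calibration:
    """Linear calibration, value = gain * raw + offset, plus a plausibility range.

    Hypothetical helper for illustration; min_valid/max_valid would come from
    the sensor's datasheet in a real deployment.
    """

    gain: float
    offset: float
    min_valid: float
    max_valid: float

    @classmethod
    def from_two_points(cls, raw_lo: float, ref_lo: float, raw_hi: float,
                        ref_hi: float, min_valid: float, max_valid: float) -> "Calibration":
        # Solve for gain/offset so raw_lo maps to ref_lo and raw_hi maps to ref_hi.
        if raw_hi == raw_lo:
            raise ValueError("calibration points must differ")
        gain = (ref_hi - ref_lo) / (raw_hi - raw_lo)
        offset = ref_lo - gain * raw_lo
        return cls(gain, offset, min_valid, max_valid)

    def apply(self, raw: float) -> Optional[float]:
        value = self.gain * raw + self.offset
        # Reject physically implausible readings instead of passing them downstream.
        if not (self.min_valid <= value <= self.max_valid):
            return None
        return value


# Example: raw ADC counts recorded in an ice bath (0 °C) and boiling water (100 °C).
cal = Calibration.from_two_points(102, 0.0, 921, 100.0, min_valid=-40.0, max_valid=125.0)
reading = cal.apply(512)
```

Rejecting out-of-range values at the source keeps a disconnected or saturated sensor from silently feeding bad values into every later layer.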

Secure Data Ingestion

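Once data is generated, it must move reliably. Protocols such as MQTT, CoAP, and HTTP carry it from devices to backend systems. MQTT and CoAP are lightweight protocols designed for constrained devices and low-latency messaging; security typically comes from running these protocols over TLS (DTLS in CoAP’s case).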

Identity management, authentication, and message integrity are essential — especially in distributed deployments. This layer ensures data arrives on time, intact, and attributable to the correct device.
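Transport encryption protects data in transit, but it can also help to make each message attributable and tamper-evident at the application layer. As one possible approach (not something the protocols above require), the sketch below signs each reading with HMAC-SHA256 using a per-device secret, then rejects messages from unknown devices, with a bad signature, or with a stale timestamp. The `DEVICE_KEYS` table and the envelope format are illustrative assumptions.

```python
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional

# Hypothetical per-device secrets. In practice these would be provisioned per
# device and kept in a secure element or secrets manager, not in source code.
DEVICE_KEYS: Dict[str, bytes] = {"sensor-042": b"demo-secret-do-not-use"}


def sign_message(device_id: str, payload: Dict[str, Any], key: bytes, ts: int) -> Dict[str, str]:
    # Canonical JSON (sorted keys, no spaces) so signer and verifier hash identical bytes.
    body = json.dumps({"device_id": device_id, "ts": ts, "payload": payload},
                      sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "hmac": tag}


def verify_message(msg: Dict[str, str], max_age_s: int = 30,
                   now: Optional[int] = None) -> Optional[Dict[str, Any]]:
    envelope = json.loads(msg["body"])
    key = DEVICE_KEYS.get(envelope.get("device_id"))
    if key is None:
        return None  # unknown device: the message cannot be attributed
    expected = hmac.new(key, msg["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, msg["hmac"]):
        return None  # body was altered or signed with a different key
    now = int(time.time()) if now is None else now
    if abs(now - envelope["ts"]) > max_age_s:
        return None  # too old (or clock skew too large): possible replay
    return envelope


msg = sign_message("sensor-042", {"temp_c": 21.5}, DEVICE_KEYS["sensor-042"], ts=int(time.time()))
accepted = verify_message(msg)  # the envelope dict if all checks pass, else None
```

A timestamp window only narrows the replay window; a production system would typically add nonces or sequence numbers and keep keys in secure hardware rather than in code.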

Edge Preprocessing & Intelligence

Sending everything to the cloud is inefficient and often impractical. At the edge, systems:

- Filter noise

- Normalize and compress data

- Detect local events

- Reduce bandwidth usage

- Respond in real time

Edge preprocessing is critical for latency-sensitive and low-power systems, allowing devices to remain functional even with limited connectivity.
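Two of the edge tasks listed above, filtering noise and reducing bandwidth, can be combined in a few lines. This sketch assumes a single numeric sensor stream and uses a moving average for smoothing plus a deadband rule (report only when the smoothed value changes meaningfully). `edge_filter`, the window size, and the deadband are hypothetical names and tuning parameters.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Optional, Tuple


def edge_filter(samples: Iterable[float], window: int = 5,
                deadband: float = 0.5) -> Iterator[Tuple[int, float]]:
    """Smooth raw samples with a moving average and yield (index, value) only
    when the smoothed value moves at least `deadband` from the last report."""
    buf: Deque[float] = deque(maxlen=window)
    last_sent: Optional[float] = None
    for i, x in enumerate(samples):
        buf.append(x)
        if len(buf) < window:
            continue  # wait until the averaging window is full
        avg = sum(buf) / window
        if last_sent is None or abs(avg - last_sent) >= deadband:
            last_sent = avg
            yield i, avg  # only these values would be transmitted upstream


# Example: a step from 20.0 to 25.0 across 20 raw samples.
reports = list(edge_filter([20.0] * 10 + [25.0] * 10))
```

In this example only 6 of the 20 samples produce a report: the initial value and the five smoothed steps during the transition. Steady readings cost no bandwidth at all.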

Cloud Storage & Stream Processing

In the cloud, data is transformed into usable information. This includes:

- Scalable storage (databases or data lakes)

- ETL pipelines for cleaning and structuring data

- Stream processors for real-time analytics

A well-designed cloud layer ensures data is searchable, reproducible, and ready for AI workflows.
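A cleaning step and a simple stream aggregation can be sketched in plain Python. The record shape `(device_id, unix_ts_seconds, value)` is an assumption. Duplicate removal targets the repeats that at-least-once delivery can produce, and `tumbling_mean` groups readings into fixed, non-overlapping time windows. A real stream processor would also have to handle late or out-of-order events, which this batch-style sketch ignores.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, List, Optional, Set, Tuple

# Assumed record shape: (device_id, unix timestamp in seconds, value or None).
Reading = Tuple[str, int, Optional[float]]


def clean(readings: Iterable[Reading]) -> List[Reading]:
    """Drop exact duplicates and readings with missing values, then order
    the rest by device and timestamp."""
    seen: Set[Reading] = set()
    out: List[Reading] = []
    for r in readings:
        if r[2] is None or r in seen:
            continue
        seen.add(r)
        out.append(r)
    return sorted(out, key=lambda r: (r[0], r[1]))


def tumbling_mean(readings: Iterable[Reading], window_s: int = 60) -> Dict[Tuple[str, int], float]:
    """Average values per device over fixed, non-overlapping windows,
    keyed by (device_id, window_start_ts)."""
    sums: DefaultDict[Tuple[str, int], float] = defaultdict(float)
    counts: DefaultDict[Tuple[str, int], int] = defaultdict(int)
    for device, ts, value in readings:
        if value is None:
            continue  # defensive: clean() should already have removed these
        key = (device, ts - ts % window_s)  # start of the window containing ts
        sums[key] += value
        counts[key] += 1
    return {k: sums[k] / counts[k] for k in sums}


raw = [("a", 65, 5.0), ("a", 0, 1.0), ("a", 30, 3.0), ("a", 30, 3.0), ("b", 10, None)]
per_minute = tumbling_mean(clean(raw))
```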

AI & Machine Learning Models

Only after the pipeline is stable does AI deliver value. Models can now:

- Predict equipment failures

- Detect anomalies

- Optimize energy usage

- Build digital twins of physical systems

At this stage, AI becomes reliable because it operates on clean, consistent, and timely data.
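Production models vary widely, but a simple statistical baseline shows why clean, consistent input matters. The sketch below flags anomalies with a rolling z-score over a trailing window. It is a swapped-in baseline rather than a trained ML model, and `rolling_zscore_anomalies`, its window, and its threshold are assumptions that would be tuned per signal.

```python
import math
from collections import deque
from typing import Deque, Iterable, List


def rolling_zscore_anomalies(values: Iterable[float], window: int = 20,
                             threshold: float = 3.0) -> List[int]:
    """Return indices whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` values."""
    hist: Deque[float] = deque(maxlen=window)
    anomalies: List[int] = []
    for i, x in enumerate(values):
        if len(hist) == window:
            mean = sum(hist) / window
            std = math.sqrt(sum((h - mean) ** 2 for h in hist) / window)
            # Skip flat windows (std == 0) to avoid dividing by zero.
            if std > 0 and abs(x - mean) / std > threshold:
                anomalies.append(i)
        hist.append(x)  # the current value joins the baseline for later points
    return anomalies


# Example: a signal alternating 10/11 with one spike to 30 at index 30.
signal = [10.0, 11.0] * 15 + [30.0] + [10.0, 11.0] * 5
flagged = rolling_zscore_anomalies(signal)
```

Because the baseline is computed from recent data, a stream full of dropped packets, duplicates, or uncalibrated offsets would distort the mean and variance and produce false alarms or missed events.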

Visualization, Automation & Decisions

The final layer turns insight into impact. Dashboards, alerts, and automated workflows allow businesses to:

- Monitor operations in real time

- Trigger corrective actions

- Enable self-healing systems

- Measure performance and ROI

This is where AIoT stops being just technology and becomes a business asset.
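Triggering corrective actions reliably usually means avoiding alert storms when a value hovers near a limit. One common pattern is an alarm with hysteresis: separate raise and clear thresholds. The `HysteresisAlarm` class below is a hypothetical sketch, and its callbacks stand in for whatever notification or actuation hook a real system would call.

```python
from typing import Callable


class HysteresisAlarm:
    """Raise when the value exceeds `high`; clear only after it drops below `low`.
    The gap between the thresholds keeps the alarm from flapping around one limit."""

    def __init__(self, high: float, low: float,
                 on_raise: Callable[[float], None],
                 on_clear: Callable[[float], None]) -> None:
        if low >= high:
            raise ValueError("low must be below high")
        self.high, self.low = high, low
        self.on_raise, self.on_clear = on_raise, on_clear
        self.active = False

    def update(self, value: float) -> None:
        if not self.active and value > self.high:
            self.active = True
            self.on_raise(value)  # e.g. open a ticket or throttle a machine
        elif self.active and value < self.low:
            self.active = False
            self.on_clear(value)  # e.g. resolve the ticket


alarm = HysteresisAlarm(80.0, 70.0,
                        on_raise=lambda v: print(f"ALARM at {v}"),
                        on_clear=lambda v: print(f"cleared at {v}"))
```

With `high=80` and `low=70`, the sequence 75, 81, 79, 82, 75, 69, 81 raises once at 81, stays active through 79, 82, and 75, clears at 69, and raises again at the final 81.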

Why Strong Data Pipelines Beat Better Models

AI models can be retrained. Data pipelines must work every second, on every device. Teams that succeed in AIoT focus on:

- Observability and logging

- Fault tolerance and retries

- Versioned data schemas

- Edge-cloud coordination

- Long-term maintainability

The result is not just smarter systems, but deployable, scalable products.
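Retries are easy to get wrong: thousands of devices retrying on a fixed interval after an outage can overload a broker the moment it comes back. The sketch below assumes transient failures surface as `ConnectionError` or `TimeoutError` and retries them with capped exponential backoff and full jitter. `retry_with_backoff` and its defaults are hypothetical, and `sleep` is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(op: Callable[[], T],
                       max_attempts: int = 5,
                       base_delay: float = 0.5,
                       max_delay: float = 30.0,
                       retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call op(), retrying transient failures with capped exponential backoff
    and full jitter. Re-raises the last error once attempts are exhausted."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return op()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the original error
            # Delay ceiling doubles each attempt (base, 2*base, 4*base, ...), capped at max_delay.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            # Full jitter: wait a random time in [0, ceiling] so devices don't retry in lockstep.
            sleep(random.uniform(0.0, ceiling))
    raise AssertionError("unreachable")
```

Retries only make sense for operations that are safe to repeat. A retried publish may arrive twice, so downstream processing should tolerate duplicates.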

How MetaDesk Global Builds Real-World AIoT Systems

At MetaDesk Global, we design AIoT systems from the ground up, starting at the sensor and ending at decision-making. Our work spans:

- Embedded firmware & edge computing

- Secure IoT communication stacks

- Data engineering & cloud pipelines

- AI-ready system architecture

- Industrial and production-grade deployments

We don’t build demos. We build systems that ship, scale, and survive in the real world.

Final Thoughts — Build Data First, Then Intelligence

AIoT isn’t magic. It’s disciplined engineering across hardware, firmware, data, and intelligence.

If your roadmap includes intelligent automation, predictive systems, or industrial AI, start by asking one question:

Is your data pipeline strong enough to support real decisions?

If the answer is no — that’s where the real work begins.